NGA - S-ADM Metadata and Jünger Audio flexAI

NGA Metadata Format S-ADM and Jünger Audio flexAI

Serialized ADM (S-ADM) metadata describes the audio it references and thereby enables object-based Next Generation Audio (NGA) productions. This white paper introduces our concept for S-ADM production workflows in flexAI, the Jünger Audio flexible audio infrastructure, for Dolby® Atmos distribution. Various audio processors can be added and routed internally to set up different broadcast chains. These processors run on the AIXpressor and on a range of flexAIserver audio processing servers, so the platform can meet different processing-power and interfacing requirements. Examples of applications:

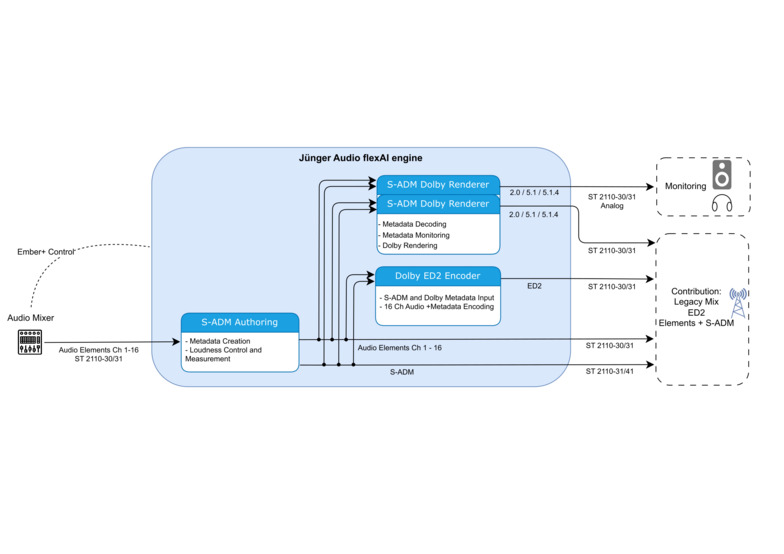

This signal flow demonstrates a typical live use case in which metadata for an object-based NGA production is authored in real time. This could be in an OB van, production studio, or secondary production unit. The flexAI receives input audio from the local mixer or outside production. This consists of a bed mix and object(s), or several different Complete Main mixes. The flexAI, for example an AIXpressor, is licensed and configured to run one instance of S-ADM Authoring and three instances of the S-ADM Dolby renderer.

The S-ADM Authoring stage creates the actual S-ADM metadata stream. All of the parameters found in the S-ADM stream can be configured and controlled via Ember+ or from the flexAI’s web-based UI. The loudness level of every audio element can also optionally be controlled, on an element-by-element basis, using Jünger Audio’s Level Magic algorithm.

The complete set of input audio channels and the associated S-ADM metadata are then routed to the AIXpressor output(s) to be backhauled to the Broadcast Operations Center, Headend, or Master Control Room. In SMPTE ST 2110 workflows, the outputs conform to ST 2110-30, -31, and -41 for the audio and S-ADM transport. The AIXpressor also supports other common “baseband” interfaces for S-ADM workflows.

The S-ADM Authoring output is also internally routed to three S-ADM Dolby renderers. The renderers display detailed information about audio elements and their properties, which can be used to confirm that the authoring is being performed correctly. Each S-ADM Dolby renderer allows independent selection of a Presentation and of an output channel configuration (Target Layout), which defines the channel-based output (e.g. 5.1.4, 5.1, or 2.0).

These options allow the three renderers to be used for local monitoring purposes (via headphones or audio outputs), or for rendering to a 5.1 or 2.0 output to be transmitted with a legacy HD broadcast feed.
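The renderer setup described above can be written down as a small configuration sketch. The Python below is illustrative only: the class `RendererConfig`, its field names, the renderer names, and the presentation name "English" are hypothetical and are not part of any flexAI API. Only the three Target Layouts are taken from the text. The number of output channels is derived from the layout name by adding its parts (for example 5 + 1 + 4).

```python
from dataclasses import dataclass

# Target Layouts named in this document.
TARGET_LAYOUTS = ("2.0", "5.1", "5.1.4")


@dataclass(frozen=True)
class RendererConfig:
    """Hypothetical description of one renderer instance (not a flexAI API)."""
    name: str
    presentation: str
    target_layout: str
    purpose: str

    def __post_init__(self):
        # Reject layouts that the document does not list as render targets.
        if self.target_layout not in TARGET_LAYOUTS:
            raise ValueError(f"unsupported target layout: {self.target_layout}")

    @property
    def output_channels(self) -> int:
        # "5.1.4" -> 5 + 1 + 4 = 10 channels
        return sum(int(part) for part in self.target_layout.split("."))


# One possible assignment of the three renderers (an assumption, not a preset).
renderers = [
    RendererConfig("renderer-1", "English", "5.1.4", "local monitoring"),
    RendererConfig("renderer-2", "English", "5.1", "legacy HD feed"),
    RendererConfig("renderer-3", "English", "2.0", "legacy HD feed"),
]

total_output_channels = sum(r.output_channels for r in renderers)  # 10 + 6 + 2 = 18

for r in renderers:
    print(f"{r.name}: {r.presentation} -> {r.target_layout} "
          f"({r.output_channels} ch, {r.purpose})")
```

In this arrangement one renderer serves immersive monitoring while the other two produce the channel-based feeds; the same structure would apply if all three were used for monitoring different presentations.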

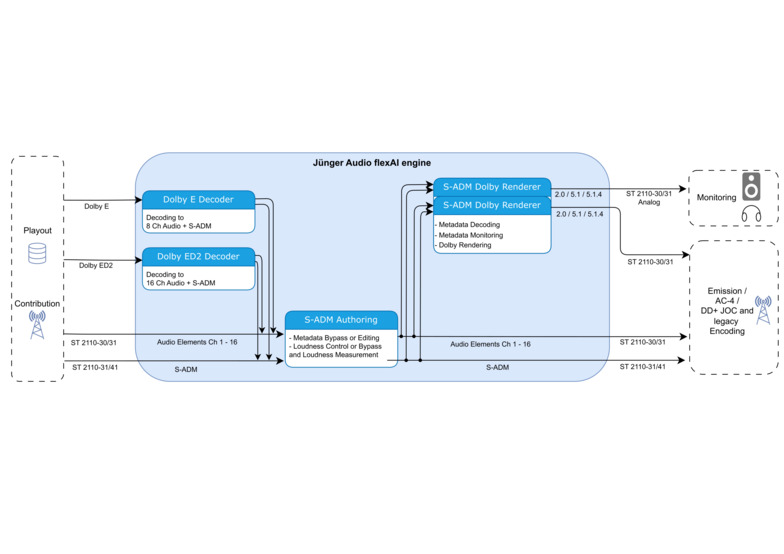

The Live Monitoring and Editing workflow is found downstream from Production and will typically be located in the Broadcast Operations Center or Master Control. Its primary purpose is to display the incoming multichannel audio and metadata, and to monitor this combination using a reference renderer that emulates the consumer’s experience. The input content can come from either a live production or a playback server. The secondary purpose is to edit and modify the incoming S-ADM metadata as necessary, and to generate the updated S-ADM metadata before the final broadcast to the viewer. One use case is language substitution when content is distributed to different regions of the world.

Within the flexAI engine, one S-ADM Authoring processor and three S-ADM Dolby renderers are instantiated. The S-ADM Authoring stage can either pass the incoming S-ADM through or capture it. Captured S-ADM metadata can be edited to generate a modified S-ADM stream. As in the Live Authoring use case above, displaying the audio elements and presentation metadata along with loudness measurements allows for full quality control of the object-based NGA production. Each S-ADM Dolby renderer allows monitoring of selectable presentations that correspond to what the end consumer hears at home. Another purpose of the renderers is to feed legacy emission encoders with compatible formats such as 2.0 or 5.1.

Features of flexAI S-ADM Processors

The Jünger Audio S-ADM Authoring & Monitoring package includes two types of processors that can be used to create a custom signal chain and fulfill the use cases described above. The key features are:

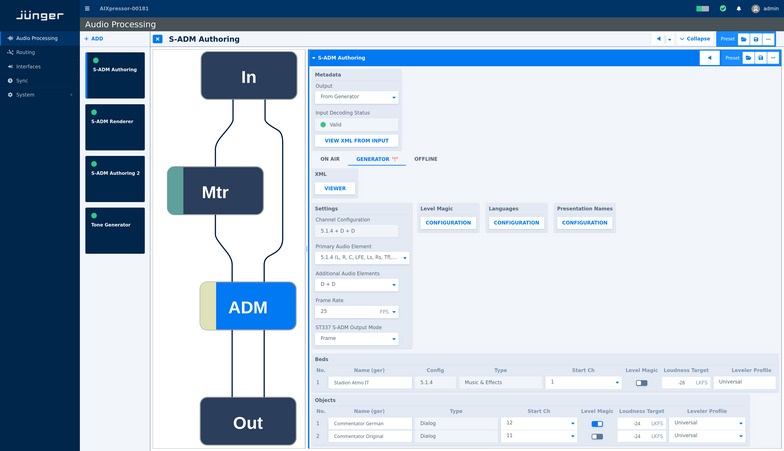

S-ADM Authoring

Authoring features:

- Channel Configuration, Names and Labels: Sets the combination of a bed or multiple channel-based mixes, such as 5.1.4, 5.1 and 2.0, and optional objects. General routing between all processors and input/output interfaces is handled centrally. This also allows an SMPTE ST 2110-31 S-ADM input to be routed to an ST 2110-41 output. Additionally, the Authoring processor allows input channel assignments. Beds, objects, and presentations can all be named and have editable language labels assigned.

- Loudness Leveling: The S-ADM Authoring processor includes the configurable Level Magic algorithm. It can level all elements (bed and objects, each individually) to a chosen Loudness Target. This gives control over the resulting loudness of presentations on the renderer side. For example, a sound engineer may use the same bed in two presentations, each mixed with a different object, but cannot actively control both resulting mixes at the same time to keep their loudness stable. If all components (the bed and every object) are leveled automatically, an offset between their loudness targets can be set once so that the bed and an object work together. The same offset then holds for the mix with the second object, because that object is leveled as well.

- Peak Meter and Loudness Measurement: Short-Term and Integrated loudness are measured for each audio element separately and for the presentations. Level meter bar graphs show peak information for all 16 audio channels.

- S-ADM XML Import/Export: Allows the S-ADM configuration to be exchanged with, for example, the encoder for fallback purposes.
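To illustrate what an exchanged S-ADM XML configuration can contain, the sketch below reads a hand-written ADM fragment with Python's standard `xml.etree.ElementTree` and lists each presentation together with the audio objects it references. The element and attribute names (`audioProgramme`, `audioContent`, `audioObject` and their ID references) follow the ADM as specified in ITU-R BS.2076. The sample document, its IDs and names, and the function `presentations` are invented for illustration. The sketch assumes that a presentation corresponds to an `audioProgramme`, and it ignores XML namespaces and the S-ADM frame wrapper that real exports may carry.

```python
import xml.etree.ElementTree as ET

# Hand-written sample for illustration only; not an export from any product.
SAMPLE_ADM = """
<audioFormatExtended>
  <audioProgramme audioProgrammeID="APR_1001" audioProgrammeName="English"
                  audioProgrammeLanguage="en">
    <audioContentIDRef>ACO_1001</audioContentIDRef>
    <audioContentIDRef>ACO_1002</audioContentIDRef>
  </audioProgramme>
  <audioProgramme audioProgrammeID="APR_1002" audioProgrammeName="Deutsch"
                  audioProgrammeLanguage="de">
    <audioContentIDRef>ACO_1001</audioContentIDRef>
    <audioContentIDRef>ACO_1003</audioContentIDRef>
  </audioProgramme>
  <audioContent audioContentID="ACO_1001" audioContentName="Bed">
    <audioObjectIDRef>AO_1001</audioObjectIDRef>
  </audioContent>
  <audioContent audioContentID="ACO_1002" audioContentName="Dialogue EN">
    <audioObjectIDRef>AO_1002</audioObjectIDRef>
  </audioContent>
  <audioContent audioContentID="ACO_1003" audioContentName="Dialogue DE">
    <audioObjectIDRef>AO_1003</audioObjectIDRef>
  </audioContent>
  <audioObject audioObjectID="AO_1001" audioObjectName="Bed 5.1.4"/>
  <audioObject audioObjectID="AO_1002" audioObjectName="Dialogue EN"/>
  <audioObject audioObjectID="AO_1003" audioObjectName="Dialogue DE"/>
</audioFormatExtended>
"""


def presentations(adm_xml: str) -> dict:
    """Map each audioProgramme name to the names of the objects it uses."""
    root = ET.fromstring(adm_xml.strip())

    # audioObjectID -> audioObjectName
    object_names = {
        obj.get("audioObjectID"): obj.get("audioObjectName")
        for obj in root.iter("audioObject")
    }
    # audioContentID -> list of referenced audioObjectIDs
    content_objects = {
        content.get("audioContentID"):
            [ref.text for ref in content.findall("audioObjectIDRef")]
        for content in root.iter("audioContent")
    }

    result = {}
    for programme in root.iter("audioProgramme"):
        names = []
        for content_ref in programme.findall("audioContentIDRef"):
            for object_id in content_objects.get(content_ref.text, []):
                names.append(object_names.get(object_id, object_id))
        result[programme.get("audioProgrammeName")] = names
    return result


for name, objects in presentations(SAMPLE_ADM).items():
    print(name, "->", objects)
```

The sample deliberately shares one bed between two presentations with different dialogue objects, which mirrors the language-substitution use case described earlier.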

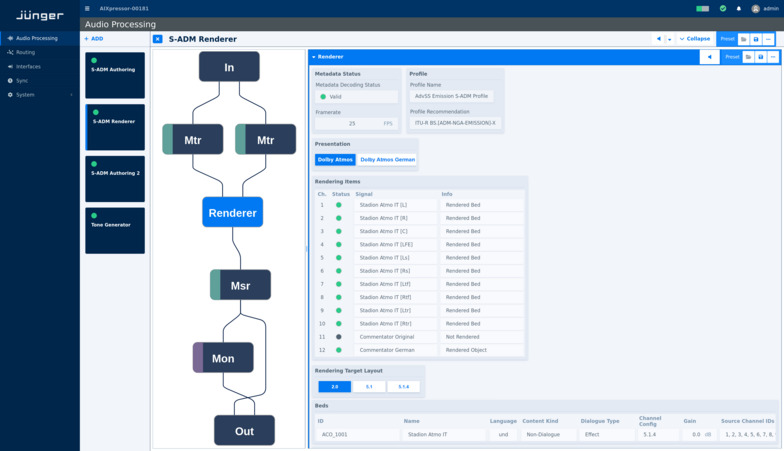
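The reasoning behind the Loudness Leveling feature can be checked with a little arithmetic. The sketch below is a simplification, not a description of the Level Magic algorithm: it assumes that the bed and the objects are uncorrelated, so that their energies add, and it ignores gating and channel weighting. The target values and the function name `combined_loudness` are chosen for illustration only.

```python
import math


def combined_loudness(levels_lufs):
    """Approximate loudness of a mix of uncorrelated elements (energy sum)."""
    return 10.0 * math.log10(sum(10.0 ** (level / 10.0) for level in levels_lufs))


# Illustrative targets: the bed is leveled 3 LU below the objects.
object_target = -24.0
bed_offset = -3.0
bed_target = object_target + bed_offset

# Both objects are leveled to the same target, so either one can be
# combined with the bed without re-adjusting the mix.
presentation_a = combined_loudness([bed_target, object_target])  # bed + object A
presentation_b = combined_loudness([bed_target, object_target])  # bed + object B

print(f"Presentation A: {presentation_a:.2f} LUFS")
print(f"Presentation B: {presentation_b:.2f} LUFS")
```

Because every element arrives at a known level, the loudness of each presentation depends only on the chosen targets and offset, not on which object is selected. Two elements at the same level add up to roughly 3 LU above either one, which is why an offset is usually needed to land on the intended presentation loudness.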

S-ADM Dolby Renderer

Renderer features:

- Rendering and Monitoring Outputs: To feed loudspeakers and legacy channel-based emission simultaneously, each renderer outputs a set of audio channels that can be adjusted in volume, set to a reference level, or muted. Additionally, a separate set of audio channels is rendered and remains unaffected by these monitor-specific operations; it can be used for legacy emission or fallback purposes. The Target Layouts for the renderer are 2.0, 5.1 and 5.1.4.

- Metadata Display: Displays beds, objects and presentations together with their Types/Channel Configurations, Gains, Positions (in the case of objects) and other properties. The processor also indicates which elements are rendered in the currently selected presentation.

- Peak Meter and Loudness Measurement: The Input and Output blocks of the processor display Peak Meters. The processor also includes loudness measurement tools such as Short-Term and Momentary bar graphs, Integrated and Maximum values of the selected render, and a recent measurement display. These tools allow compliance checks against loudness regulations based on ITU-R BS.1770.
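For readers unfamiliar with the Integrated value mentioned in the loudness measurement item above, the following sketch shows the two-stage gating defined in ITU-R BS.1770. It is not the renderer's implementation. It assumes that the per-block energies (K-weighted, channel-weighted mean squares of 400 ms blocks) have already been computed, and it covers only the gating step. The function names are chosen for illustration.

```python
import math

ABSOLUTE_GATE_LUFS = -70.0  # blocks below this level are always ignored
RELATIVE_GATE_LU = -10.0    # second gate, relative to the ungated mean


def energy_to_loudness(energy: float) -> float:
    """Convert a weighted mean-square energy to loudness in LUFS."""
    return -0.691 + 10.0 * math.log10(energy)


def loudness_to_energy(lufs: float) -> float:
    """Inverse of energy_to_loudness."""
    return 10.0 ** ((lufs + 0.691) / 10.0)


def integrated_loudness(block_energies) -> float:
    """Gated integrated loudness from precomputed block energies."""
    # Stage 1: absolute gate at -70 LUFS (compared in the energy domain).
    abs_threshold = loudness_to_energy(ABSOLUTE_GATE_LUFS)
    above_abs = [e for e in block_energies if e > abs_threshold]
    if not above_abs:
        return float("-inf")

    # Stage 2: relative gate 10 LU below the mean of the remaining blocks.
    mean_energy = sum(above_abs) / len(above_abs)
    rel_threshold = mean_energy * 10.0 ** (RELATIVE_GATE_LU / 10.0)
    gated = [e for e in above_abs if e > rel_threshold]

    return energy_to_loudness(sum(gated) / len(gated))


# Programme material at -23 LUFS, a quiet passage at -40 LUFS, and silence.
blocks = (
    [loudness_to_energy(-23.0)] * 10
    + [loudness_to_energy(-40.0)] * 10
    + [0.0] * 10
)
print(f"{integrated_loudness(blocks):.1f} LUFS")
```

The gates keep silence and very quiet passages from pulling the Integrated value down, which is why a programme with pauses can still be compared against a single loudness target.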

Remote Control

Full remote control, configuration, and monitoring of the AIXpressor and other units running flexAI is supported via a web browser, such as Google Chrome. All parameters of the audio processors can be accessed via Ember+, and presets for the processors and routing configurations can be stored, recalled, and pushed from broadcast scheduling systems. NMOS IS-04 and IS-05 are also supported for discovery and connection control of AoIP streams.
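As an illustration of NMOS connection control, the sketch below builds (but does not send) an IS-05 request that stages and immediately activates a connection on a receiver. The path and body fields follow the AMWA IS-05 Connection API. The host name, the UUIDs, the placeholder SDP text, the choice of API version v1.1, and the function `build_staged_patch` are assumptions made for this example and do not describe a specific flexAI endpoint.

```python
import json
import urllib.request


def build_staged_patch(node_url, receiver_id, sender_id, sdp, api_version="v1.1"):
    """Build an IS-05 PATCH request for a receiver's staged endpoint."""
    url = (f"{node_url}/x-nmos/connection/{api_version}"
           f"/single/receivers/{receiver_id}/staged")
    body = {
        "sender_id": sender_id,
        "master_enable": True,
        # Activate as soon as the staged parameters are accepted.
        "activation": {"mode": "activate_immediate"},
        "transport_file": {"type": "application/sdp", "data": sdp},
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PATCH",
    )


# All values below are hypothetical placeholders.
request = build_staged_patch(
    node_url="http://node.example",
    receiver_id="11111111-2222-3333-4444-555555555555",
    sender_id="66666666-7777-8888-9999-000000000000",
    sdp="v=0\r\n",  # placeholder; a real request carries the sender's full SDP
)

print(request.get_method(), request.full_url)
print(json.dumps(json.loads(request.data), indent=2))
```

In practice the sender ID and SDP would be obtained through IS-04 discovery, and the request would be issued by a broadcast controller rather than by hand.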